When to Build AI Agents vs Assistants: A Framework for Technical Leaders


Everyone's talking about AI agents. Most companies are building the wrong architecture for their use case.

Here's the reality: 57% of companies already have AI agents in production, with another 30% actively developing them. But for two consecutive quarters, 65% of enterprise leaders have cited agentic system complexity as their top adoption barrier. The problem isn't capability - it's selection. Technical leaders are deploying agent architectures for workflows that need assistants, or building assistants where autonomous systems would deliver far larger returns.

The difference isn't just semantic. It's architectural, economic, and strategic. Get it wrong and you'll either cap your ROI with underbuilt systems or waste months over-engineering unnecessary complexity. Get it right and you join the 62% of companies that anticipate ROI of 100% or more from their agent deployments.

The Architectural Difference

AI assistants enhance human productivity through suggestions and responses. You ask ChatGPT to draft an email. GitHub Copilot suggests code completions. Salesforce Einstein surfaces insights from your CRM data. The human stays in the loop for every decision and action.

AI agents replace entire workflows through autonomous loops. They perceive the state of their environment, make decisions using reasoning and tools, take actions without human approval, and learn from outcomes. When QA flow analyzes a Figma design, it doesn't suggest test cases - it generates them, executes them across 2,400 test suites monthly, and automatically creates bug tickets in Jira. The human reviews results, not every step.

This architectural distinction determines everything downstream: cost structure, operational patterns, success metrics, and ROI potential. You can't retrofit an assistant into an agent later without rebuilding from scratch.
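To make the contrast concrete, here is a minimal Python sketch of the two control flows. It is an illustration under stated assumptions, not how any product named in this article is built: the class names, the callables (`suggest`, `perceive`, `decide`, `act`), and the sample data are all hypothetical. The point it shows is where the human sits: inside every step for the assistant, after the cycle for the agent.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Assistant:
    """Hypothetical assistant: produces a suggestion, a human approves it."""
    suggest: Callable[[str], str]

    def handle(self, request: str, approve: Callable[[str], bool]) -> Optional[str]:
        draft = self.suggest(request)
        # Nothing happens unless the human approves this specific draft.
        return draft if approve(draft) else None


@dataclass
class Agent:
    """Hypothetical agent: runs perceive -> decide -> act with no approval gate."""
    perceive: Callable[[], List[str]]
    decide: Callable[[str], str]
    act: Callable[[str], bool]
    outcomes: List[Tuple[str, str, bool]] = field(default_factory=list)

    def run_cycle(self) -> List[Tuple[str, str, bool]]:
        cycle = []
        for observation in self.perceive():
            action = self.decide(observation)
            succeeded = self.act(action)  # executed without human sign-off
            cycle.append((observation, action, succeeded))
        # Outcomes are kept so later cycles (or a reviewer) can learn from them.
        self.outcomes.extend(cycle)
        return cycle


# Illustrative stand-ins only; real systems would call models and tools here.
assistant = Assistant(suggest=lambda req: f"Draft reply to: {req}")
agent = Agent(
    perceive=lambda: ["login screen", "checkout screen"],
    decide=lambda screen: f"run tests for {screen}",
    act=lambda action: "checkout" not in action,
)
```

In this sketch the human appears as the `approve` callback on every assistant call, while for the agent the human only reads `agent.outcomes` after `run_cycle()` returns.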


The Selection Framework

High-ROI AI agent opportunities share four characteristics. Use these as your selection criteria:

High-volume repetitive workflows. The workflow executes frequently enough to justify architectural investment. Testing 2,400 suites monthly makes autonomy worth building. Running 10 tests quarterly doesn't. If annual execution volume is under 500 cycles, start with an assistant.

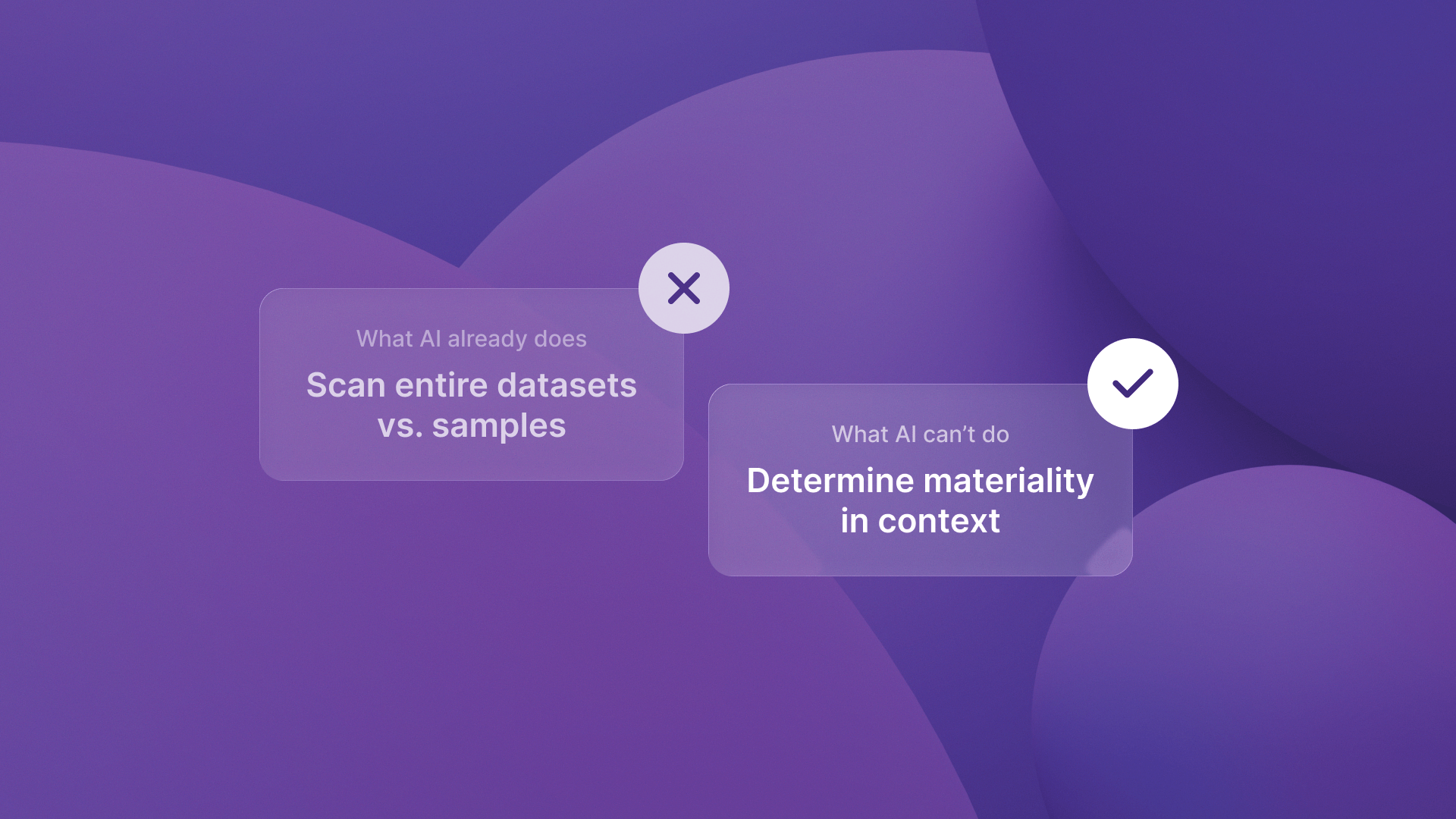

Clear success criteria. You can define "done" without ambiguity and measure quality programmatically. Bug detection has objective pass/fail criteria. Creative brainstorming doesn't. If you can't automate validation, you need human judgment - that's an assistant use case.

Structured data inputs. The agent receives consistent, parseable inputs it can reason over reliably. Figma design specs and user stories are structured. Customer support conversations are semi-structured. Purely unstructured inputs create reliability issues that cap autonomous potential.

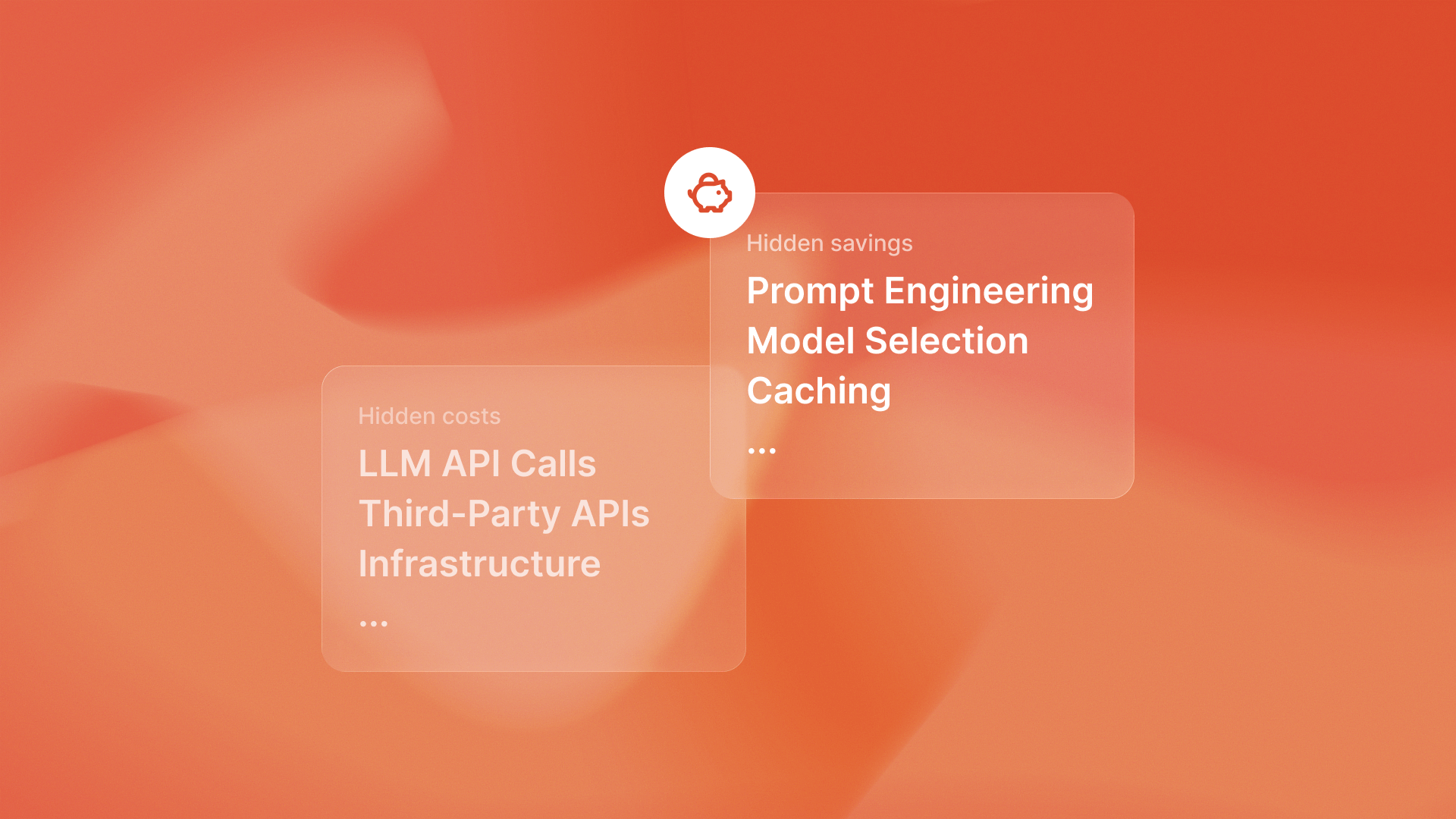

Meaningful cost of human execution. The cost of running the workflow manually must justify agent development and infrastructure spend. When Timecapsule analyzed QA workflows, teams spent 40+ hours weekly writing test cases. That's $50,000+ annually in engineering time - plenty of ROI headroom for an autonomous system. A workflow consuming 2 hours monthly doesn't clear the bar.
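The four criteria above can be sketched as a simple screening function. This is a rough illustration, not a definitive scoring model. The 500-cycle volume threshold comes from the text. Everything else is an assumption made for the example: the `Workflow` fields, the `recommend` function, the $50,000 cost cutoff (the article cites that figure as an example, not as a threshold), and the cost assigned to the quarterly workflow.

```python
from dataclasses import dataclass
from typing import List, Tuple

MIN_ANNUAL_CYCLES = 500  # volume threshold stated in the text


@dataclass
class Workflow:
    name: str
    annual_cycles: int             # how often the workflow runs per year
    validation_automatable: bool   # can "done" be checked programmatically?
    inputs_structured: bool        # consistent, parseable inputs?
    annual_human_cost_usd: float   # cost of doing it manually


def recommend(workflow: Workflow,
              min_human_cost_usd: float = 50_000.0) -> Tuple[str, List[str]]:
    """Return ("agent" | "assistant", list of criteria the workflow fails).

    min_human_cost_usd is an assumed cutoff; tune it to your own
    development and infrastructure costs.
    """
    gaps: List[str] = []
    if workflow.annual_cycles < MIN_ANNUAL_CYCLES:
        gaps.append("volume")
    if not workflow.validation_automatable:
        gaps.append("success criteria")
    if not workflow.inputs_structured:
        gaps.append("structured inputs")
    if workflow.annual_human_cost_usd < min_human_cost_usd:
        gaps.append("human cost")
    return ("agent" if not gaps else "assistant", gaps)


# 2,400 suites monthly, as in the QA example above.
qa_suites = Workflow("QA test suites", 2_400 * 12, True, True, 50_000)
# 10 tests quarterly; the $2,000 cost is a made-up figure for illustration.
quarterly_tests = Workflow("quarterly tests", 10 * 4, True, True, 2_000)
```

Calling `recommend(qa_suites)` returns `("agent", [])`, while `recommend(quarterly_tests)` returns `("assistant", ["volume", "human cost"])`. Treating any single failed criterion as a reason to start with an assistant is a deliberately conservative choice in this sketch; a weighted score would be an equally reasonable variant.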

What This Buys You

The companies getting this right are seeing real returns. Not hype-driven projections, but production economics that justify the architectural complexity. They're identifying workflows where autonomous loops actually make sense: compliance monitoring at Shoreline, LinkedIn campaign orchestration at Ingage, continuous QA testing at QA flow.

Meanwhile, companies chasing agent hype are discovering the 65% barrier is real. They're over-engineering workflows that needed simple automation, or under-investing in opportunities where autonomy would deliver step-function improvements.

The Strategic Stakes

The selection framework isn't about moving fast. It's about moving strategically on opportunities that justify autonomous architecture while avoiding the complexity trap on everything else.

By late 2026, the companies that master this distinction will have built sustainable competitive advantages through well-deployed autonomous systems. Those that chase hype or under-invest will face costly rebuilds within 12-18 months, once their architectural choices hit scaling limits.

